Essentials

Play with Maestro Core basic concepts.

Core Data Objects (CDOs)

A core data object (CDO) is conceptually meant to combine all available information from both the hardware/storage side and the software/semantic side. As the most complete understanding that Maestro Core can obtain about a particular data object, the CDO is how applications communicate their intentions to Maestro Core. The CDO typically represents real data and their physical location (if known).

A name is the minimal metadata needed for a valid CDO declaration, and eventual transfers:

mstro_cdo handle;

mstro_cdo_declare(handle, "my_cdo_name");

This allows Maestro Core to uniquely identify data objects.

Metadata

With just a name, transfers consist of minimal metadata only. To specify actual data to be transferred, a pointer and a size are needed:

mstro_cdo_attribute_set(src_handle, MSTRO_ATTR_CORE_CDO_RAW_PTR, my_raw_pointer, ...);

mstro_cdo_attribute_set(src_handle, MSTRO_ATTR_CORE_CDO_SCOPE_LOCAL_SIZE, &my_data_size, ...);

At this point in the CDO definition, the upcoming transfer will consist of both data and metadata. Further metadata, in particular user-defined and layout metadata, may also be added.

Memory

Internally, Maestro relies on the Mamba library to manage CDO arrays in memory, in particular for Maestro-side allocation (as opposed to user-provided allocation) and for migrating arrays between memory layers. The Mamba library is embedded in the Maestro Core repository and can readily be used.

mstro_cdo dst_handle;

char* data; size_t len;

mmbArray* ma;

...

mstro_cdo_demand(dst_handle);

mstro_cdo_access_ptr(dst_handle, (void**)&data, &len);

mstro_cdo_access_mamba_array(dst_handle, &ma);

Users may access a demanded CDO's pointer and length directly through the mstro_cdo_access_ptr() function, or access the Mamba array prepared by Maestro via mstro_cdo_access_mamba_array(). The resulting Mamba array may then be iterated over, for instance with the Mamba tile API. See the Mamba library documentation for more information.

Layout

CDO core attributes also include layout attributes, which may be set by both producers and consumers:

int64_t patt = ROWMAJ; // 0 for row-major, 1 for column-major

mstro_cdo_attribute_set(cdo, MSTRO_ATTR_CORE_CDO_LAYOUT_ORDER, &patt, ...);

Maestro Core takes care of any layout transformation needed to accommodate the layout attributes required on the consumer side, given the layout attributes set on the producer side.
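To make the idea of a layout transformation concrete, here is a minimal standalone sketch of converting a 2D array from row-major to column-major order. It does not use or reproduce Maestro Core's internal code; the function name row_to_col_major and the choice of double elements are assumptions made purely for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Illustrative helper (not part of Maestro Core): copy a rows x cols
 * matrix stored row-major in src into dst in column-major order.
 * src and dst must not overlap and must each hold rows*cols elements. */
void row_to_col_major(const double *src, double *dst,
                      size_t rows, size_t cols)
{
    assert(src != NULL && dst != NULL);
    for (size_t r = 0; r < rows; r++) {
        for (size_t c = 0; c < cols; c++) {
            /* Element (r, c) sits at r*cols + c in row-major order
             * and at c*rows + r in column-major order. */
            dst[c * rows + r] = src[r * cols + c];
        }
    }
}
```

For a 2 x 3 matrix stored as 1, 2, 3, 4, 5, 6 in row-major order, the column-major copy holds 1, 4, 2, 5, 3, 6. A consumer that declares a different order than the producer would receive the data rearranged in this way, without having to write such a loop itself.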

Distributed CDOs, on the other hand, are expressed via a Mamba layout:

mmbLayout* dist_layout;

...

mstro_cdo_attribute_set(cdo, MSTRO_ATTR_CORE_CDO_DIST_LAYOUT, dist_layout, ...);

Note

All participants in a distributed CDO transfer use the same CDO name. Maestro Core still treats a CDO with a distributed layout as a single CDO.

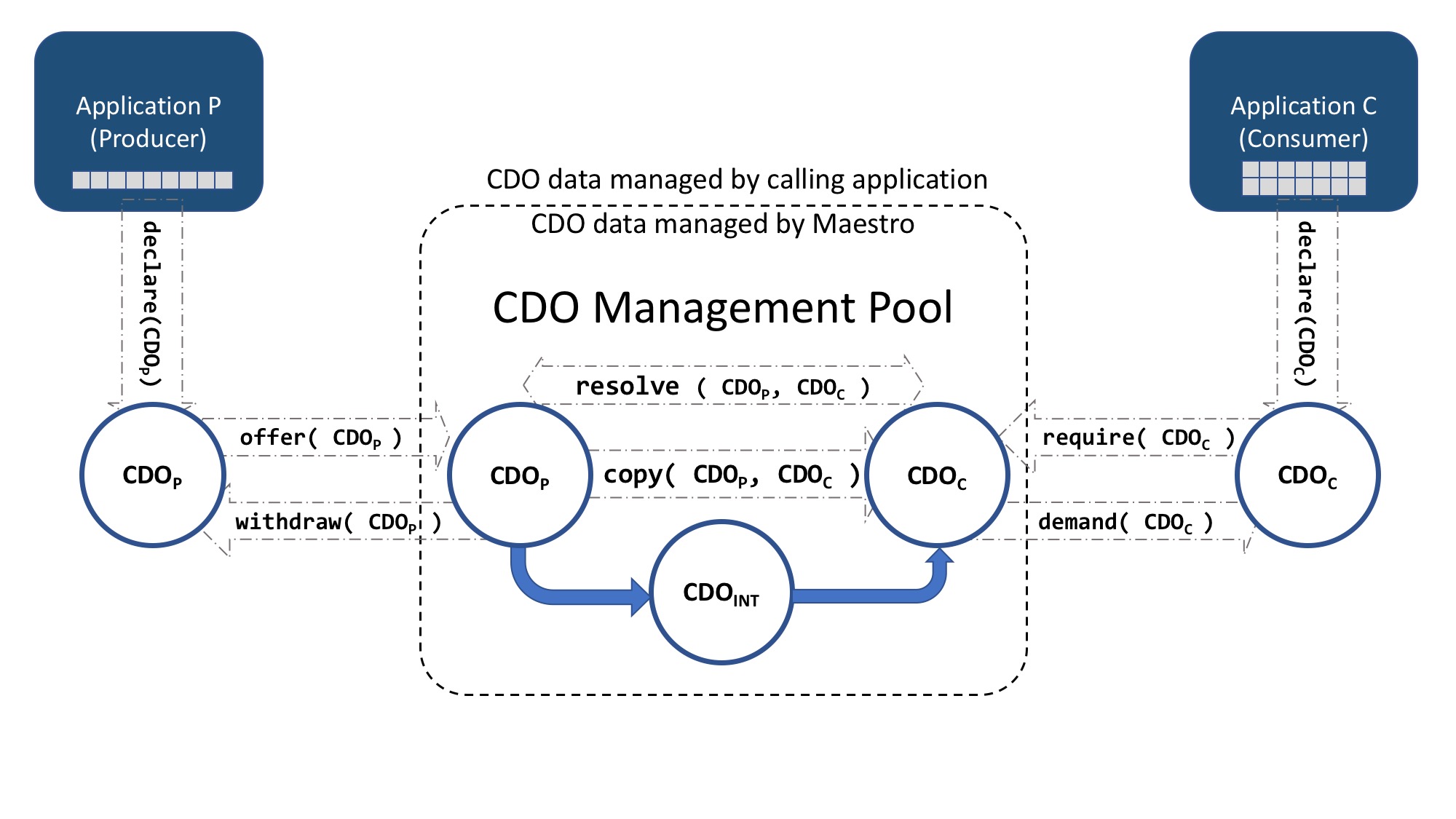

CDO Management

Once the metadata is set, the user may seal the CDO. After sealing, no more metadata can be added, and metadata transport may be triggered later on.

mstro_cdo_seal(handle);

To make a CDO available for consumers, the user may offer it

mstro_cdo_offer(src_handle);

This puts the CDO into the Maestro Pool, which consists of the set of all offered CDOs. From this point on, the contract between the user and Maestro Core is that the user may not access the CDO data memory region, which Maestro Core can freely use for its own purposes.

Afterwards, the user may withdraw the CDO, which removes it from the Maestro Pool. Once the CDO is withdrawn, its data is guaranteed to have been left unscathed by Maestro.

mstro_cdo_withdraw(src_handle);

On the consumer side, after sealing, the CDO may be required with mstro_cdo_require(). This tells Maestro Core that the CDO will ultimately be needed by that component, so that Maestro Core may anticipate the transfer and have the CDO ready for the consumer when it is demanded:

mstro_cdo_demand(dst_handle);

At this point, the requested CDO (data and metadata) is available at dst_handle.

Finally, when the user is done with the CDO, it can signal this to Maestro Core so that the associated resources are released. The CDO data itself is released only if it was allocated by Maestro Core.

mstro_cdo_dispose(handle);

Pool Manager

Automatic multi-application rendezvous is implemented with the help of a dedicated Pool Manager component using Fabric. Users do not have to select network interfaces, and no administrative permissions or daemons are needed.

For cross-application transport, a Pool Manager process must already be running so that mstro_init() can make the network connection. The Pool Manager can be started programmatically:

mstro_init("my_workflow", "Pool_Manager", rank);

mstro_pm_start();

char *pm_info = NULL;

mstro_pm_getinfo(&pm_info);

...

mstro_pm_terminate();

mstro_finalize();

This approach turns the current process into the Pool Manager process. Alternatively, a Pool Manager application is built as part of the maestro-core installation and can be used as is:

./tests/simple_pool_manager

With this second approach, the Pool Manager prints information (pm_info here) containing the list of endpoints on which it wishes to be contacted by workflow components. A script can read this output and set it as the environment variable MSTRO_POOL_MANAGER_INFO, which components need in order to connect to the Pool Manager at mstro_init() time. Typically:

export MSTRO_WORKFLOW_NAME="my_workflow"

PM_CMD="../tests/simple_pool_manager"

exec 3< <(env ${PM_CMD})

read -d ';' -u 3 pm_info_varname

read -d ';' -u 3 pm_info

export MSTRO_POOL_MANAGER_INFO="$pm_info"

(env MSTRO_COMPONENT_NAME="C1" ${CLIENT_CMD})

(env MSTRO_COMPONENT_NAME="C2" ${CLIENT_CMD})

This launches a Pool Manager, reads its info output, and makes the required info available through the environment to Maestro Core in the clients launched afterwards.
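A launcher written in C rather than shell would need the same parsing step as the two read -d ';' calls in the script. The sketch below assumes, based only on that script, that the Pool Manager output starts with two ';'-terminated fields (a variable name, then the info string). The helper split_pm_output() is hypothetical and not part of Maestro Core.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: split "NAME;VALUE;..." into its first two
 * ';'-terminated fields, mirroring the two `read -d ';'` calls of the
 * shell script. Returns 0 on success, -1 if a field is missing or does
 * not fit in the supplied buffer (including its terminating NUL). */
int split_pm_output(const char *out,
                    char *name, size_t name_cap,
                    char *value, size_t value_cap)
{
    assert(out != NULL && name != NULL && value != NULL);

    const char *first = strchr(out, ';');
    if (first == NULL)
        return -1;
    const char *second = strchr(first + 1, ';');
    if (second == NULL)
        return -1;

    size_t name_len = (size_t)(first - out);
    size_t value_len = (size_t)(second - (first + 1));
    if (name_len >= name_cap || value_len >= value_cap)
        return -1;

    memcpy(name, out, name_len);
    name[name_len] = '\0';
    memcpy(value, first + 1, value_len);
    value[value_len] = '\0';
    return 0;
}
```

The extracted value could then be exported with the POSIX call setenv("MSTRO_POOL_MANAGER_INFO", value, 1) before the client processes are started, which corresponds to the export line of the script.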

Transport

CDO transport is decoupled from the pool operations, which means CDO offer resolution and metadata transfer are decoupled from the actual CDO content transfer. It makes the pool operations fast, and independent of CDO storage resource handling, transport methods, and layout transformations.

Note

This also means that the Pool Manager decides when to perform the actual transport. mstro_cdo_offer() merely offers the CDO to the Pool and does not perform any transfer itself, and mstro_cdo_demand() does not necessarily trigger a transfer either. Ideally, the Pool Manager anticipates the transfer thanks to the mstro_cdo_require() call.

The default transport of Maestro Core is OFI (RDMA), whose provider can be set with the FI_PROVIDER environment variable. The default transport can be overridden by setting the MSTRO_TRANSPORT_DEFAULT environment variable to “GFS” for file system transport or to “MIO” for object store transport. MIO is the Maestro Core interface to object stores; its one backend is the cortx-motr object store.
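A launcher or test harness may want to check the transport setting before starting components. The following sketch maps the documented values of MSTRO_TRANSPORT_DEFAULT to an enum. The enum and both functions are hypothetical names introduced here, and how Maestro Core itself reacts to an unrecognized or empty value is not stated in this section, so the sketch simply reports it as unknown.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical names, not part of the Maestro Core API. */
typedef enum {
    TRANSPORT_OFI,     /* default when the variable is unset */
    TRANSPORT_GFS,     /* file system transport */
    TRANSPORT_MIO,     /* object store transport */
    TRANSPORT_UNKNOWN  /* any other value; handling is an assumption */
} transport_kind;

/* Classify a setting string; NULL stands for "variable not set". */
transport_kind transport_from_setting(const char *setting)
{
    if (setting == NULL)
        return TRANSPORT_OFI;
    if (strcmp(setting, "GFS") == 0)
        return TRANSPORT_GFS;
    if (strcmp(setting, "MIO") == 0)
        return TRANSPORT_MIO;
    return TRANSPORT_UNKNOWN;
}

/* Classify the value currently found in the environment. */
transport_kind transport_from_env(void)
{
    return transport_from_setting(getenv("MSTRO_TRANSPORT_DEFAULT"));
}
```

Keeping the string classification separate from the getenv() call makes the mapping easy to check without modifying the process environment.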

Events

Maestro Core clients may monitor Maestro Pool events, in particular the CDO events produced internally by each CDO management API call, which are what allow the Pool Manager to keep track of the proceedings. Such events may be filtered using a selector:

mstro_cdo_selector selector=NULL;

mstro_cdo_selector_create( ..., "(has .maestro.core.cdo.name)", &selector);

Subscribing to events is done with

mstro_subscription cdo_subscription=NULL;

mstro_subscribe(selector, MSTRO_POOL_EVENT_OFFER, ..., &cdo_subscription);

A subscription may combine several event kinds, such as the MSTRO_POOL_EVENT_OFFER shown here, using the | operator.

Then events may be polled

mstro_pool_event event;

mstro_subscription_poll(cdo_subscription, &event);

Note

mstro_subscription_poll() returns a list of events through event: event is the head of the list, and the next element is event->next.

Events may then be inspected for CDO properties:

const char *cdo_name=NULL;

switch(event->kind) {

case MSTRO_POOL_EVENT_OFFER:

cdo_name = event->offer.cdo_name;

...
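Since a poll yields a linked list and event kinds can be combined with |, a typical consumer loop walks the list and tests each kind against a mask. The sketch below shows that pattern on stand-in types: event_node and the EV_* constants are hypothetical and only mimic the kind and next fields mentioned above; they are not the Maestro Core definitions, and the bit-flag encoding of kinds is an assumption.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-ins for pool event kinds, encoded as bit flags so
 * that several kinds can be combined with |. */
enum {
    EV_OFFER    = 1 << 0,
    EV_WITHDRAW = 1 << 1,
    EV_DEMAND   = 1 << 2
};

/* Hypothetical stand-in for a polled event: a singly linked list node. */
typedef struct event_node {
    int kind;
    const char *cdo_name;
    struct event_node *next;
} event_node;

/* Count the events in the list whose kind is included in kind_mask. */
size_t count_matching(const event_node *head, int kind_mask)
{
    assert(kind_mask != 0); /* an empty mask would never match anything */
    size_t n = 0;
    for (const event_node *e = head; e != NULL; e = e->next) {
        if (e->kind & kind_mask)
            n++;
    }
    return n;
}
```

The same loop shape applies when handling real events: start at the head returned by the poll, follow next until it is NULL, and dispatch on the kind of each element.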

Groups

Groups allow CDO batch handling. Group members (the CDOs) may be added by name or by handle:

mstro_group_declare(g_name,&g_prod);

mstro_group_add_by_name(g_prod, c_names[i]);

mstro_group_add(g_prod, cdo_handles[i]);

Groups can then be offered and demanded, similarly to CDOs, with mstro_group_offer() and mstro_group_demand(). The semantics differ for the latter, however: only the metadata is transferred, so the group elements may be inspected

mstro_group_size_get(g_cons, &count);

mstro_cdo c;

for(size_t idx=0; idx<count; idx++) {

mstro_group_next(g_cons, &c);

assert(NULL!=c);

mstro_cdo_dispose(c);

}

before being potentially individually required.

Note

All group participants, consumers included, must know all member CDOs, at least by name.